Service Mesh in Traefik Enterprise

Traefik Enterprise uses Traefik Mesh to provide a service mesh for your services. Traefik Mesh is a lightweight service mesh, designed from the ground up to be straightforward and easy to use.

Moreover, Traefik Mesh is opt-in by default, which means that your existing services are unaffected until you decide to add them to the mesh. For more information about Traefik Mesh, please consult its documentation.

Kubernetes only

The service mesh feature is only available for Kubernetes clusters.

Compatibility

The service mesh feature is compatible with open source Kubernetes and commercial distributions from most vendors and cloud providers, including AKS, EKS, GKE, and others. OpenShift is currently not supported, due to its security constraints and internal incompatibilities.

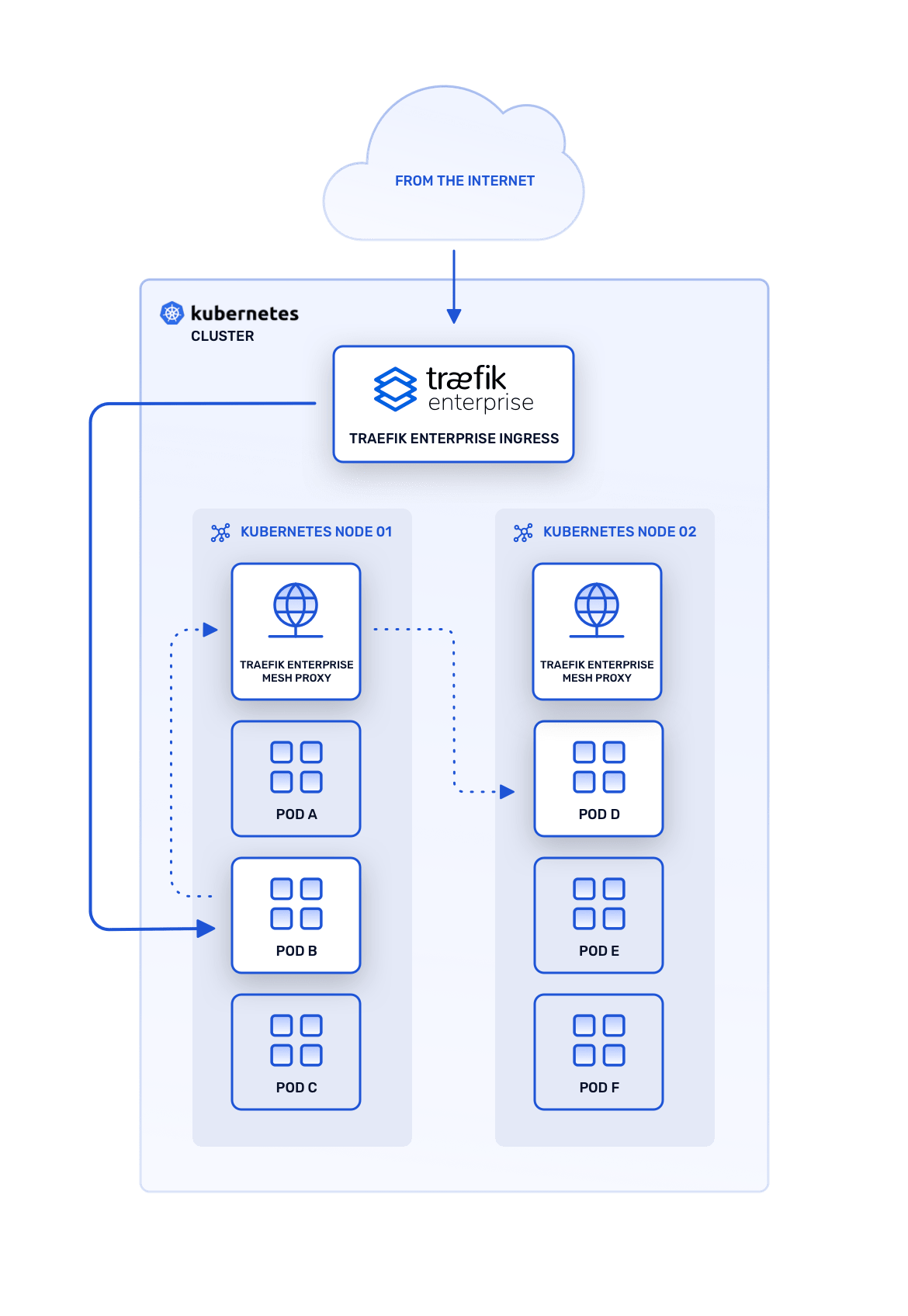

Architecture

The Traefik Mesh data plane is deployed on every node of the Kubernetes cluster. When a Pod sends a request to another service through the mesh, the request first goes to the mesh proxy instance running on the Pod's own node, which then forwards it to the destination Pod.

Installing the Service Mesh

The service mesh is installed along with Traefik Enterprise by running the `teectl setup gen` command with the `--mesh` option, as described in the Kubernetes installation guide. You then also need to run the `teectl setup patch-dns` command, which patches your cluster's DNS configuration to add the rules specific to the service mesh.

Once the generated installation manifest has been applied to your cluster, you also need to enable the mesh in your static configuration.
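As a minimal sketch, enabling the mesh could look like the static configuration fragment below. It assumes that declaring a `mesh` section, even an empty one, is what turns the feature on; the options you can set inside it are described under Static Configuration.

```yaml
# Traefik Enterprise static configuration (sketch)
# Assumption: declaring the mesh section enables the service mesh.
# An empty object keeps every mesh option at its default value
# (for example, defaultMode stays http).
mesh: {}
```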

Requests through the Service Mesh

Now that the service mesh is enabled in your cluster, you can deploy services and make direct requests between them.

To specify the traffic type of a service, add the annotation `mesh.traefik.io/traffic-type: "http"` to your HTTP services and `mesh.traefik.io/traffic-type: "tcp"` to your TCP services.

If a service has no such annotation, its traffic type is set to the value of the `defaultMode` option in the mesh static configuration (`http` by default).
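For illustration, consider a hypothetical cluster whose mesh static configuration sets `defaultMode: tcp`. An HTTP service in that cluster has to carry the annotation explicitly, otherwise the mesh would handle it as TCP. The service name `web`, its namespace and its labels are placeholders.

```yaml
# Hypothetical HTTP service in a cluster whose static configuration sets:
#   mesh:
#     defaultMode: tcp
# Without the annotation below, this service would inherit the tcp default.
apiVersion: v1
kind: Service
metadata:
  name: web            # placeholder name
  namespace: default
  annotations:
    mesh.traefik.io/traffic-type: "http"
spec:
  type: ClusterIP
  ports:
    - port: 80
      name: web
  selector:
    app: web
```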

Once these steps are complete, each service can be reached through the mesh at `servicename.namespace.maesh`.
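A client application opts into the mesh simply by addressing the mesh hostname instead of the regular cluster DNS name. The Deployment below is a hypothetical sketch: `my-client`, its image, and the `WHOAMI_URL` and `WHOAMI_DIRECT_URL` variables are placeholders, and it targets the `whoami` service from the example that follows.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-client                          # placeholder client application
  namespace: whoami
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-client
  template:
    metadata:
      labels:
        app: my-client
    spec:
      containers:
        - name: my-client
          image: my-registry/my-client:1.0 # placeholder image
          env:
            # servicename.namespace.maesh: routed through the local mesh proxy
            - name: WHOAMI_URL
              value: http://whoami.whoami.maesh
            # Regular cluster DNS name: bypasses the mesh (opt-in behaviour)
            - name: WHOAMI_DIRECT_URL
              value: http://whoami.whoami.svc.cluster.local
```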

Here is an example using some whoami services and a client Pod:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: whoami

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: whoami-server
  namespace: whoami

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: whoami-client
  namespace: whoami

---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: whoami
  namespace: whoami
spec:
  replicas: 2
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      serviceAccountName: whoami-server
      containers:
        - name: whoami
          image: traefik/whoami:v1.4.0
          imagePullPolicy: IfNotPresent

---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: whoami-tcp
  namespace: whoami
spec:
  replicas: 2
  selector:
    matchLabels:
      app: whoami-tcp
  template:
    metadata:
      labels:
        app: whoami-tcp
    spec:
      serviceAccountName: whoami-server
      containers:
        - name: whoami-tcp
          image: traefik/whoamitcp:latest
          imagePullPolicy: IfNotPresent

---
apiVersion: v1
kind: Service
metadata:
  name: whoami
  namespace: whoami
  labels:
    app: whoami
  # These annotations enable Traefik Mesh for this service:
  annotations:
    mesh.traefik.io/traffic-type: "http"
    mesh.traefik.io/retry-attempts: "2"
spec:
  type: ClusterIP
  ports:
    - port: 80
      name: whoami
  selector:
    app: whoami

---
apiVersion: v1
kind: Service
metadata:
  name: whoami-tcp
  namespace: whoami
  labels:
    app: whoami-tcp
  # These annotations enable Traefik Mesh for this service:
  annotations:
    mesh.traefik.io/traffic-type: "tcp"
spec:
  type: ClusterIP
  ports:
    - port: 8080
      name: whoami-tcp
  selector:
    app: whoami-tcp

---
apiVersion: v1
kind: Pod
metadata:
  name: whoami-client
  namespace: whoami
spec:
  serviceAccountName: whoami-client
  containers:
    - name: whoami-client
      image: giantswarm/tiny-tools:3.9
      command:
        - "sleep"
        - "3600"
```

Once you apply those files, you should see the following when running `kubectl get all -n whoami`:

```
NAME                             READY   STATUS    RESTARTS   AGE
pod/whoami-client                1/1     Running   0          11s
pod/whoami-f4cbd7f9c-lddgq       1/1     Running   0          12s
pod/whoami-f4cbd7f9c-zk4rb       1/1     Running   0          12s
pod/whoami-tcp-7679bc465-ldlt2   1/1     Running   0          12s
pod/whoami-tcp-7679bc465-wf87n   1/1     Running   0          12s

NAME                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
service/whoami       ClusterIP   100.68.109.244   <none>        80/TCP     13s
service/whoami-tcp   ClusterIP   100.68.73.211    <none>        8080/TCP   13s

NAME                         DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/whoami       2         2         2            2           13s
deployment.apps/whoami-tcp   2         2         2            2           13s

NAME                                   DESIRED   CURRENT   READY   AGE
replicaset.apps/whoami-f4cbd7f9c       2         2         2       13s
replicaset.apps/whoami-tcp-7679bc465   2         2         2       13s
```

You should now be able to make requests between your services. Here is an example to test HTTP traffic:

```shell
kubectl -n whoami exec whoami-client -- curl -s whoami.whoami.maesh
```

```
Hostname: whoami-84bdf87956-gvbm8
IP: 127.0.0.1
IP: 5.6.7.8
RemoteAddr: 1.2.3.4:12345
GET / HTTP/1.1
Host: whoami.whoami.maesh
User-Agent: curl/7.64.0
Accept: */*
```

Finally, for TCP traffic, you can use netcat and send some data:

```shell
kubectl -n whoami exec -ti whoami-client -- nc whoami-tcp.whoami.maesh 8080
```

```
my data
Received: my data
```

Configuration

The service mesh itself is configured in the Traefik Enterprise static configuration, while the way each of your services integrates with the mesh is controlled by the dynamic configuration.

Static Configuration

Here is an example of what you can do with the static configuration for your service mesh:

```yaml
# Traefik Enterprise configuration example
providers:
  kubernetesCRD: {}

log:
  level: INFO

api:
  dashboard: true

# Service Mesh configuration
mesh:
  acl: true
  ignoreNamespaces:
    - mysecretnamespace
    - myothersecretnamespace
  defaultMode: http
  tracing:
    serviceName: myservice
    jaeger:
      samplingServerURL: http://jaeger-agent.traefikee.svc.cluster.local:5778/sampling
      # Jaeger sampling type: const, probabilistic or ratelimiting
      samplingType: const
      collector:
        endpoint: http://jaeger-collector.traefikee.svc.cluster.local:14268/api/traces?format=jaeger.thrift
  metrics:
    prometheus: {}
```

```toml
[providers]
  [providers.kubernetesCRD]

[log]
  level = "INFO"

[api]
  dashboard = true

[mesh]
  acl = true
  ignoreNamespaces = [
    "mysecretnamespace",
    "myothersecretnamespace"
  ]
  defaultMode = "http"

  [mesh.tracing]
    serviceName = "myservice"

    [mesh.tracing.jaeger]
      samplingServerURL = "http://jaeger-agent.traefikee.svc.cluster.local:5778/sampling"
      # Jaeger sampling type: const, probabilistic or ratelimiting
      samplingType = "const"

      [mesh.tracing.jaeger.collector]
        endpoint = "http://jaeger-collector.traefikee.svc.cluster.local:14268/api/traces?format=jaeger.thrift"

  [mesh.metrics]
    [mesh.metrics.prometheus]
```

In this example, we:

- Enable the ACL mode, which forbids all traffic unless explicitly authorized using the SMI TrafficTarget resource.

- Tell the service mesh to use HTTP as the default traffic type for services within the mesh.

- Let the service mesh know which namespaces to ignore within the cluster.

- Expose service mesh tracing to Jaeger.

- Expose service mesh metrics to Prometheus on port 8080.
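With `acl: true`, the requests from the whoami example above would be denied until they are explicitly authorized. The following sketch allows the `whoami-client` service account to send HTTP requests to Pods running as `whoami-server`. The resource names `whoami-routes` and `whoami-client-to-server` are hypothetical, and the SMI API versions (`specs.smi-spec.io/v1alpha3`, `access.smi-spec.io/v1alpha2`) are an assumption based on recent Traefik Mesh releases; check them against the SMI CRDs installed in your cluster. The `whoami-tcp` service would need a separate TCP rule, which is not shown here.

```yaml
# Assumed SMI API version; verify against your installed CRDs.
apiVersion: specs.smi-spec.io/v1alpha3
kind: HTTPRouteGroup
metadata:
  name: whoami-routes            # hypothetical name
  namespace: whoami
spec:
  matches:
    - name: all
      pathRegex: "/.*"           # every path
      methods: ["*"]             # every HTTP method

---
# Assumed SMI API version; verify against your installed CRDs.
apiVersion: access.smi-spec.io/v1alpha2
kind: TrafficTarget
metadata:
  name: whoami-client-to-server  # hypothetical name
  namespace: whoami
spec:
  # Pods running as this service account receive the traffic
  destination:
    kind: ServiceAccount
    name: whoami-server
    namespace: whoami
  # Only the routes matched by this group are allowed
  rules:
    - kind: HTTPRouteGroup
      name: whoami-routes
      matches:
        - all
  # Pods running as this service account may send the traffic
  sources:
    - kind: ServiceAccount
      name: whoami-client
      namespace: whoami
```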

Dynamic Configuration

Traefik Mesh discovers your Kubernetes resources and automatically builds its configuration from the topology of your Services, Pods, Deployments and TrafficSplits.
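As a sketch of the TrafficSplit case, the resource below would send roughly 80% of the requests addressed to a root `whoami` service to a `whoami-v1` service and 20% to `whoami-v2`. Both backend services are hypothetical and must exist alongside the root service, and the `split.smi-spec.io/v1alpha3` API version is an assumption to verify against the SMI CRDs installed in your cluster.

```yaml
# Assumed SMI API version; verify against your installed CRDs.
apiVersion: split.smi-spec.io/v1alpha3
kind: TrafficSplit
metadata:
  name: whoami-split        # hypothetical name
  namespace: whoami
spec:
  # Root service that clients address (whoami.whoami.maesh)
  service: whoami
  # Weighted backends; whoami-v1 and whoami-v2 are hypothetical Services
  backends:
    - service: whoami-v1
      weight: 80
    - service: whoami-v2
      weight: 20
```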

Your services can be annotated, as described in the Traefik Mesh documentation, to enable specific features in the service mesh, including rate limiting, circuit breaking, retries, and other middlewares.
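The following Service sketch combines several of these annotations. `mesh.traefik.io/traffic-type` and `mesh.traefik.io/retry-attempts` come from the whoami example above; the rate-limit and circuit-breaker annotation names are based on the Traefik Mesh annotation reference and should be treated as assumptions to check against the Traefik Mesh version bundled with your Traefik Enterprise release. All values are illustrative.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: whoami
  namespace: whoami
  annotations:
    mesh.traefik.io/traffic-type: "http"
    # Retry a failed request up to 2 times
    mesh.traefik.io/retry-attempts: "2"
    # Assumed annotation names: average and burst request rates for the rate limiter
    mesh.traefik.io/ratelimit-average: "100"
    mesh.traefik.io/ratelimit-burst: "200"
    # Assumed annotation name; the value uses Traefik Proxy's circuit-breaker expression syntax
    mesh.traefik.io/circuit-breaker-expression: "NetworkErrorRatio() > 0.30"
spec:
  type: ClusterIP
  ports:
    - port: 80
      name: whoami
  selector:
    app: whoami
```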

Advanced Configuration

As shown in the aforementioned example configuration, it is possible to expose metrics and tracing from your service mesh. Supported metrics and tracing integrations are listed in Traefik Proxy's documentation pages for Metrics and Tracing.
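As an illustration, the fragment below nests a Prometheus metrics configuration with custom histogram buckets and a Zipkin tracing backend under `mesh`, reusing the keys of Traefik Proxy v2. The Zipkin endpoint URL is a placeholder, and whether a given backend is available depends on the integrations listed in the Traefik Proxy documentation.

```yaml
# Traefik Enterprise static configuration (sketch)
mesh:
  metrics:
    # Same keys as Traefik Proxy's Prometheus metrics configuration
    prometheus:
      buckets:
        - 0.1
        - 0.3
        - 1.2
        - 5.0
  tracing:
    # Same keys as Traefik Proxy's Zipkin tracing configuration
    zipkin:
      httpEndpoint: http://zipkin.traefikee.svc.cluster.local:9411/api/v2/spans  # placeholder URL
```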

The configuration is identical to Traefik Proxy's metrics and tracing configuration, with the exception that it has to be part of the mesh static configuration in Traefik Enterprise: