AI Gateway

AI Gateway is a service-based solution integrated within Traefik Hub, designed to simplify the management and integration of multiple Large Language Model (LLM) providers.

It offers a unified API to connect with various AI services, centralizing configuration, security, and observability for enterprise-grade AI deployments behind a single, secure gateway. It can also be combined with existing Traefik middlewares to suit different use cases, so it works as a versatile building block that complements your current infrastructure.

Key features and benefits

- Unified AI API Access: Connect to various AI providers through a single API, simplifying integration and reducing complexity.

- Load Balancing Across Multiple AIs: Distribute requests among different AI providers to optimize performance and reliability.

- Secure Credential Management: Manage API keys and tokens in a central location, ensuring they are not exposed to end-users and can be rotated seamlessly.

- Centralized Governance: Implement uniform policies for authentication, authorization, and rate limiting across all AI services.

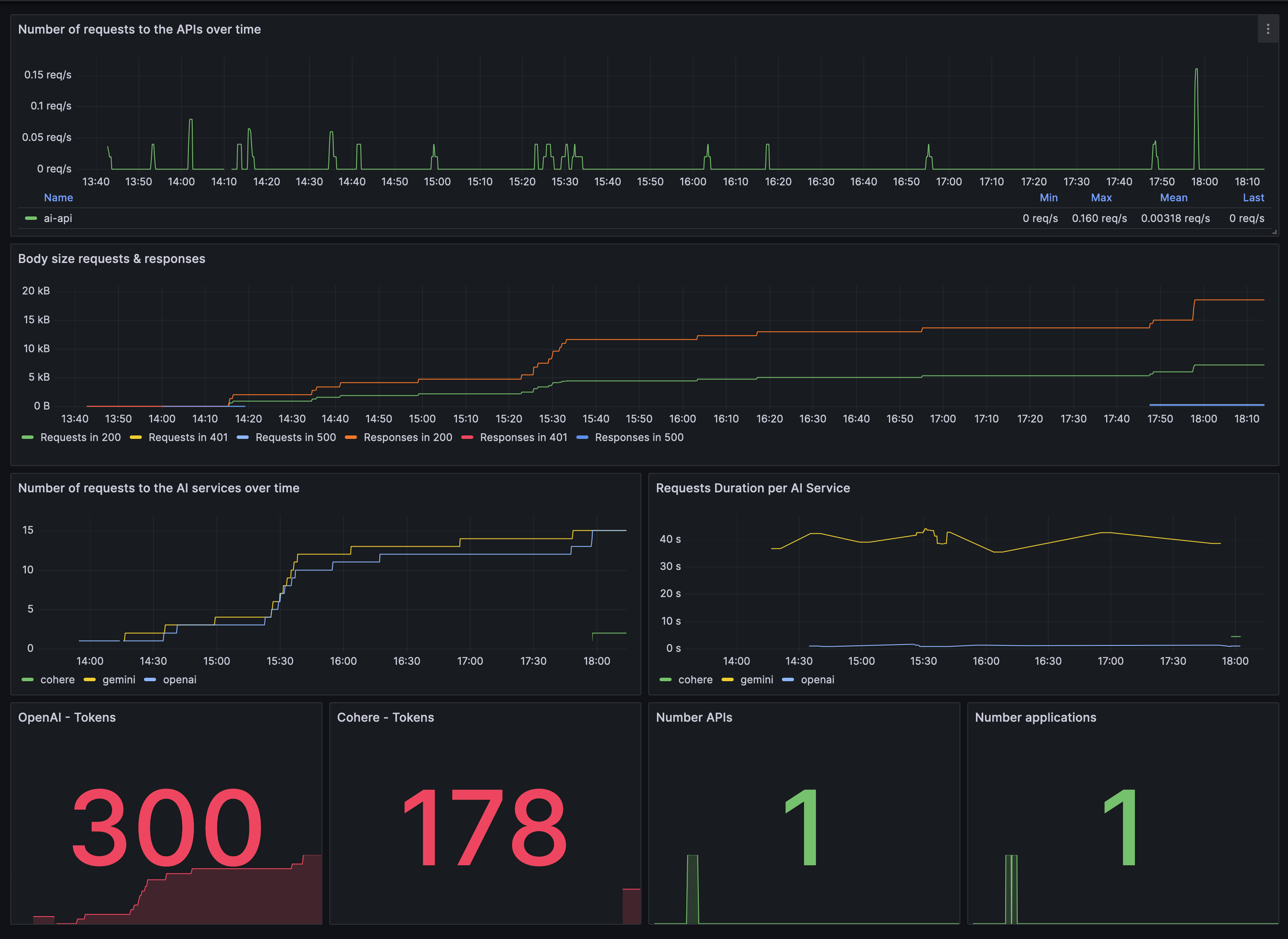

- Enhanced Observability with OpenTelemetry: Gain comprehensive insights into AI operations through standardized usage metrics.

- Avoid Vendor Lock-In: Switch between AI providers without changing client applications, keeping your AI strategy flexible and future-proof.
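To make the load-balancing idea more concrete, the sketch below distributes requests between two AI backends in proportion to their weights using a smooth weighted round-robin. This is a generic algorithm shown for illustration only; it is not Traefik's internal implementation, and this page does not describe exactly how the AI Gateway balances between providers. The backend names `openai` and `mistral` and their weights are hypothetical.

```python
from typing import Dict, List


class SmoothWeightedRoundRobin:
    """Generic smooth weighted round-robin picker (illustrative only)."""

    def __init__(self, weights: Dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self.weights = dict(weights)
        self.total = sum(self.weights.values())
        # Running "current weight" per backend; every backend starts at zero.
        self.current = {name: 0 for name in self.weights}

    def pick(self) -> str:
        # Raise each backend's current weight by its configured weight.
        for name, weight in self.weights.items():
            self.current[name] += weight
        # Choose the backend with the highest current weight (first one wins ties).
        chosen = max(self.current, key=self.current.__getitem__)
        # Penalize the chosen backend by the total weight so others catch up.
        self.current[chosen] -= self.total
        return chosen


if __name__ == "__main__":
    # Hypothetical split: roughly 3 of every 4 requests go to "openai".
    lb = SmoothWeightedRoundRobin({"openai": 3, "mistral": 1})
    picks: List[str] = [lb.pick() for _ in range(8)]
    print(picks)
```

The "smooth" variant interleaves picks instead of sending three requests in a row to the heavier backend, which spreads load more evenly over short time windows.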

Supported AI providers

The Traefik Hub AI Gateway currently supports the following AI providers:

- Anthropic

- AzureOpenAI

- Bedrock

- Cohere

- DeepSeek

- Gemini

- Ollama

- OpenAI

- Mistral

- Qwen

Creating an AI gateway with the AIService CRD

- Enable the AI Gateway feature by upgrading your Traefik Hub deployment:

```bash
helm upgrade traefik -n traefik --wait \
  --reuse-values \
  --set hub.experimental.aigateway=true \
  traefik/traefik
```

The AI Gateway feature is currently marked as experimental. It is nevertheless fully functional and ready for use, and we are committed to maintaining and enhancing it. Because the AI space evolves quickly, the API may change in future releases to accommodate new developments. Check the latest documentation regularly to take full advantage of upcoming improvements.

- Define and apply an `AIService` resource for any of the supported AI providers. This example uses the OpenAI provider.

```yaml
apiVersion: hub.traefik.io/v1alpha1
kind: AIService
metadata:
  name: ai-openai
  namespace: traefik
spec:
  openai:
    baseURL: "YOUR_BASE_URL"
    token: "YOUR_OPENAI_TOKEN"
    model: "o1-preview"
```

The `baseURL` field is optional. Set it only when your AI provider exposes an OpenAI-compatible API: it lets OpenAI-compatible services integrate through the `openai` provider without requiring a dedicated configuration.

See the AIService reference page for more details.
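If you generate manifests from a script instead of writing YAML by hand, the optional `baseURL` rule can be encoded directly. The Python sketch below builds the same `AIService` as a dictionary and adds `baseURL` only when one is given. The function name `build_openai_aiservice` is hypothetical, and it covers only the fields shown in the example above; check the AIService reference page for the full schema.

```python
import json
from typing import Any, Dict, Optional


def build_openai_aiservice(
    name: str,
    namespace: str,
    token: str,
    model: str,
    base_url: Optional[str] = None,
) -> Dict[str, Any]:
    """Build an AIService manifest (as a dict) mirroring the YAML example."""
    openai: Dict[str, Any] = {"token": token, "model": model}
    # baseURL is optional: only set it for an OpenAI-compatible provider.
    if base_url is not None:
        openai["baseURL"] = base_url
    return {
        "apiVersion": "hub.traefik.io/v1alpha1",
        "kind": "AIService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"openai": openai},
    }


if __name__ == "__main__":
    manifest = build_openai_aiservice(
        "ai-openai", "traefik", "YOUR_OPENAI_TOKEN", "o1-preview"
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON manifests as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`.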

- Attach the `ai-openai` `AIService` created above to an IngressRoute as a `TraefikService`:

IngressRoute:

```yaml
apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: ai-test
  namespace: traefik
spec:
  routes:
    - kind: Rule
      match: Host(`ai.localhost`)
      services:
        - kind: TraefikService
          name: traefik-ai-openai@ai-gateway-service
```

IngressRoute with API Management enabled:

```yaml
apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  annotations:
    hub.traefik.io/api: "ai@traefik"
  name: ai-test
  namespace: traefik
spec:
  routes:
    - kind: Rule
      match: Host(`ai.localhost`)
      services:
        - kind: TraefikService
          name: traefik-ai-openai@ai-gateway-service
```

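To reference your `AIService` in an IngressRoute, use the format `<namespace>-<ai-service-name>@ai-gateway-service`. For example, the `ai-openai` service in the `traefik` namespace becomes `traefik-ai-openai@ai-gateway-service`.

If you have API Management enabled, you can reference your API with the `hub.traefik.io/api` annotation, using the format `<api-name>@<namespace>`.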
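The two naming rules can be captured in a pair of small helpers, which is handy when IngressRoutes are generated by tooling. This is a minimal sketch: the function names `traefik_service_ref` and `api_annotation` are hypothetical, and the formats are taken from the rules stated above.

```python
from typing import Dict


def traefik_service_ref(namespace: str, ai_service_name: str) -> str:
    """Service name to use in an IngressRoute to reference an AIService."""
    return f"{namespace}-{ai_service_name}@ai-gateway-service"


def api_annotation(api_name: str, namespace: str) -> Dict[str, str]:
    """Annotation that links an IngressRoute to a managed API."""
    return {"hub.traefik.io/api": f"{api_name}@{namespace}"}


if __name__ == "__main__":
    print(traefik_service_ref("traefik", "ai-openai"))
    print(api_annotation("ai", "traefik"))
```

With the values from this page, the helpers reproduce the `traefik-ai-openai@ai-gateway-service` service name and the `ai@traefik` annotation used in the IngressRoute examples.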

- Make a request to the `AIService`.

The example below sends a request to the OpenAI `o1-preview` model.

Request:

```bash
curl -d '{
  "messages": [
    {
      "role": "user",
      "content": "tell me a joke"
    }
  ]
}' http://ai.localhost
```

Response:

```json
{
  "id": "chatcmpl-AaXqZLqy082BZ81TNICriIzjqvrOD",
  "object": "chat.completion",
  "created": 1733273279,
  "model": "o1-preview-2024-09-12",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "Sure! Here's a joke for you:\n\n**Why don't scientists trust atoms?**\n\nBecause they make up everything!"
      },
      "finish_reason": "stop",
      "content_filter_results": {
        "hate": {
          "filtered": false
        },
        "self_harm": {
          "filtered": false
        },
        "sexual": {
          "filtered": false
        },
        "violence": {
          "filtered": false
        },
        "jailbreak": {
          "filtered": false,
          "detected": false
        },
        "profanity": {
          "filtered": false,
          "detected": false
        }
      }
    }
  ],
  "usage": {
    "prompt_tokens": 32,
    "completion_tokens": 419,
    "total_tokens": 451,
    "prompt_tokens_details": {
      "audio_tokens": 0,
      "cached_tokens": 0
    },
    "completion_tokens_details": {
      "audio_tokens": 0,
      "reasoning_tokens": 384
    }
  },
  "system_fingerprint": "fp_e76890f0c3"
}
```
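The same request can be sent from application code. The standard-library Python sketch below posts the chat payload to the route and extracts the reply and token counts from a response shaped like the one above. It assumes the IngressRoute from this page is reachable at `http://ai.localhost`. The `Content-Type: application/json` header is an addition not present in the curl example, and the function names `chat` and `summarize` are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict


def chat(url: str, prompt: str, timeout: float = 60.0) -> Dict[str, Any]:
    """POST a single-message chat request to the gateway and decode the JSON reply."""
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]}).encode()
    req = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def summarize(completion: Dict[str, Any]) -> Dict[str, Any]:
    """Pull the reply text and token counts out of a chat completion."""
    usage = completion.get("usage", {})
    return {
        "model": completion.get("model"),
        "reply": completion["choices"][0]["message"]["content"],
        "prompt_tokens": usage.get("prompt_tokens", 0),
        "completion_tokens": usage.get("completion_tokens", 0),
        "total_tokens": usage.get("total_tokens", 0),
    }
```

For example, `summarize(chat("http://ai.localhost", "tell me a joke"))` would return the joke together with its token usage. Because the client only talks to the gateway route, switching the underlying provider in the `AIService` does not require changing this code.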

For a comprehensive list of configuration examples and options for each supported provider, refer to the AI Gateway reference documentation.

Observability and monitoring

The Traefik Hub AI Gateway integrates with OpenTelemetry to provide comprehensive usage metrics tailored for Generative AI operations. This allows you to monitor token usage, operation durations, and overall system performance.

See the Metrics page for more information.
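To illustrate what "token usage" and "operation duration" mean in practice, the sketch below tallies them per model on the client side, using the `usage` block returned with each completion. It does not use OpenTelemetry and does not reproduce the gateway's metric names; the class names `ModelUsage` and `UsageTracker` are hypothetical. Refer to the Metrics page for the metrics the gateway actually exports.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class ModelUsage:
    requests: int = 0
    prompt_tokens: int = 0
    completion_tokens: int = 0
    durations_s: List[float] = field(default_factory=list)


class UsageTracker:
    """Client-side tally of token usage and request duration per model."""

    def __init__(self) -> None:
        self.by_model: Dict[str, ModelUsage] = defaultdict(ModelUsage)

    def record(self, completion: Dict[str, Any], duration_s: float) -> None:
        # Read token counts from the completion's "usage" block, if present.
        usage = completion.get("usage", {})
        stats = self.by_model[completion.get("model", "unknown")]
        stats.requests += 1
        stats.prompt_tokens += usage.get("prompt_tokens", 0)
        stats.completion_tokens += usage.get("completion_tokens", 0)
        stats.durations_s.append(duration_s)

    def average_duration(self, model: str) -> float:
        # Use .get so that querying an unknown model does not create an entry.
        stats = self.by_model.get(model)
        if stats is None or not stats.durations_s:
            return 0.0
        return sum(stats.durations_s) / len(stats.durations_s)
```

In an application, `duration_s` could be measured with `time.perf_counter()` before and after each request. Aggregating at the gateway instead, as the AI Gateway does, gives the same view across all clients without instrumenting each one.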

Frequently asked questions

- How do I rotate API tokens without downtime?

  To rotate API tokens, update the `token` or `apiKey` field in the corresponding `AIService` resource. Traefik AI Gateway automatically uses the new credentials, without requiring changes to client applications.

- Can I monitor AI service performance?

  Yes. Traefik AI Gateway integrates with OpenTelemetry to provide detailed metrics on token usage and operation durations. You can visualize these metrics with monitoring tools such as Prometheus and Grafana.
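One way to update only the credential field is a JSON merge patch, which leaves the other fields of the `AIService` (such as `model` or `baseURL`) untouched. The Python sketch below builds such a patch. The function name `token_rotation_patch` is hypothetical, and the `kubectl patch aiservice ...` command in the comment assumes the CRD can be addressed by the resource name `aiservice`; verify this against your cluster before relying on it.

```python
import json
from typing import Any, Dict


def token_rotation_patch(provider: str, field_name: str, new_value: str) -> Dict[str, Any]:
    """JSON merge patch that replaces one credential field of an AIService."""
    if field_name not in ("token", "apiKey"):
        raise ValueError("expected 'token' or 'apiKey'")
    # A merge patch only overwrites the keys it contains; sibling fields are kept.
    return {"spec": {provider: {field_name: new_value}}}


if __name__ == "__main__":
    patch = token_rotation_patch("openai", "token", "NEW_OPENAI_TOKEN")
    # Could be applied with, for example (resource name is an assumption):
    #   kubectl patch aiservice ai-openai -n traefik --type merge -p '<patch>'
    print(json.dumps(patch))
```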


Related content

- Learn more about the AI Gateway in its reference documentation.

- Learn more about Traefik Service in its dedicated section.

- Learn more about IngressRoute in its dedicated section.